By: Picaud Vincent

Re-posted from: https://pixorblog.wordpress.com/2016/07/17/direct-convolution/

For small kernels, direct convolution beats the FFT-based one. I present here a basic implementation. This implementation allows one to compute

$$\gamma[k] = \sum_{i\in\Omega_\alpha} \alpha[i]\,\beta[k+\lambda i] \qquad (1)$$

From time to time we will use the notation $\gamma = \alpha \star_\lambda \beta$.

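An arbitrary stride $\lambda$ has been introduced to define:

- convolution, $\lambda = -1$: $\gamma[k] = \sum_i \alpha[i]\,\beta[k-i]$

- cross-correlation, $\lambda = +1$: $\gamma[k] = \sum_i \alpha[i]\,\beta[k+i]$

- the stationary wavelet transform (the so-called “à trous” algorithm), $\lambda = \pm 2^j$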

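Also note that with a proper boundary extension (essentially periodic or zero padding), changing the sign of $\lambda$ gives the adjoint operator:

$$\langle v, \alpha \star_\lambda \beta \rangle = \langle \alpha \star_{-\lambda} v, \beta \rangle$$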
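To make Eq. (1) concrete before the bookkeeping that follows, here is a brute-force Julia sketch (not the implementation presented later). It assumes zero padding and uses a hypothetical `DomVec` helper type that stores a vector together with its integer domain. It checks that $\lambda = -1$ gives the usual convolution, that $\lambda = 2$ (one “à trous” level) matches $\lambda = 1$ with a kernel dilated by zero insertion, and the adjoint property mentioned above.

```julia
# Brute-force sketch of Eq. (1): γ[k] = Σ_{i∈Ωα} α[i] β[k + λi], with zero padding.
# `DomVec` is a hypothetical helper type: a vector carrying its integer domain Ω.
struct DomVec
    Ω::UnitRange{Int}
    v::Vector{Float64}   # v[1] holds the component of index first(Ω)
end

# Component of index k, zero outside the domain (zero padding)
getc(x::DomVec, k::Int) = k in x.Ω ? x.v[k - first(x.Ω) + 1] : 0.0

# Eq. (1) evaluated for every k ∈ Ωγ
eq1(α::DomVec, λ::Int, β::DomVec, Ωγ::UnitRange{Int}) =
    [sum(getc(α, i) * getc(β, k + λ*i) for i in α.Ω) for k in Ωγ]

α = DomVec(0:2, [1.0, 2.0, 3.0])
β = DomVec(0:3, [1.0, 0.0, -1.0, 2.0])

# λ = -1: convolution, i.e. the coefficients of (1 + 2x + 3x²)(1 - x² + 2x³)
println(eq1(α, -1, β, 0:5))   # prints [1.0, 2.0, 2.0, 0.0, 1.0, 6.0]

# λ = 2 (one "à trous" level) equals λ = 1 with α dilated by inserting zeros
α_dilated = DomVec(0:4, [1.0, 0.0, 2.0, 0.0, 3.0])
@assert eq1(α, 2, β, -8:3) == eq1(α_dilated, 1, β, -8:3)

# Adjoint identity under zero padding: ⟨v, α ⋆_λ β⟩ = ⟨α ⋆_{-λ} v, β⟩
v = DomVec(-4:3, [2.0, -1.0, 0.5, 3.0, 1.0, -2.0, 4.0, 0.0])
lhs = sum(v.v .* eq1(α, 2, β, v.Ω))
rhs = sum(eq1(α, -2, v, β.Ω) .* β.v)
@assert lhs ≈ rhs
```

The domain of `v` is chosen wide enough to cover every $k$ for which $\alpha \star_2 \beta$ can be nonzero, which is what makes the zero-padding adjoint identity hold exactly.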

Disclaimer

Maybe the following is overly detailed for a task as simple as Eq. (1), but I found it worthwhile to write it down once and for all. Maybe it can be useful to someone else.

Some notations

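We denote by $\Omega$ the domain (or support) of a vector; for instance

$$\Omega_\alpha = [-1, 2]$$

means that $\alpha$ is defined for $\alpha[-1], \alpha[0], \alpha[1], \alpha[2]$.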

To get interval lower/upper bounds we use the notation $\Omega = [\Omega^-, \Omega^+]$.

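We denote by $\lambda\Omega$ the scaled domain

$$\lambda\Omega = [(\lambda\Omega)^-, (\lambda\Omega)^+]$$

defined by:

$$(\lambda\Omega)^- = \min(\lambda\Omega^-, \lambda\Omega^+), \qquad (\lambda\Omega)^+ = \max(\lambda\Omega^-, \lambda\Omega^+)$$

where $\lambda \in \mathbb{Z}$ and $\lambda \neq 0$.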

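Finally we use the relative complement of $A$ with respect to the set $B$, defined by

$$A \setminus B = \{k \in A : k \notin B\}$$

This set is not necessarily connected; however, since we are working with intervals of $\mathbb{Z}$, it is sufficient to introduce the left and right parts (which can be empty):

$$(A \setminus B)^{\text{left}} = [A^-, \min(A^+, B^- - 1)], \qquad (A \setminus B)^{\text{right}} = [\max(A^-, B^+ + 1), A^+]$$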
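A small worked example of these notations (the numbers are chosen only for illustration): with $\Omega = [-1, 2]$ and $\lambda = -2$ the negative scaling swaps the bounds, and with $A = [1, 10]$ the relative complement gives two nonempty parts for $B = [4, 6]$, but an empty right part for $B = [12, 15]$.

$$\lambda\Omega = [\min(2, -4),\ \max(2, -4)] = [-4, 2]$$

$$[1,10] \setminus [4,6]:\quad \text{left} = [1, \min(10, 3)] = [1, 3], \qquad \text{right} = [\max(1, 7), 10] = [7, 10]$$

$$[1,10] \setminus [12,15]:\quad \text{left} = [1, \min(10, 11)] = [1, 10], \qquad \text{right} = [\max(1, 16), 10] = [16, 10] = \emptyset$$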

Goal

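Given two vectors $\alpha$, $\beta$ defined on $\Omega_\alpha$, $\Omega_\beta$, we want to define and implement an algorithm that computes

$$\gamma[k] = \sum_{i\in\Omega_\alpha} \alpha[i]\,\beta[k+\lambda i]$$

for $k \in \Omega_\gamma$.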

First step, no boundary extension

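We need to define the domain $\Omega_{\gamma_1}$ that does not violate the $\beta$ domain of definition. This can be expressed as

$$\Omega_{\gamma_1} = \{k : \forall i \in \Omega_\alpha,\ k + \lambda i \in \Omega_\beta\}$$

Let’s write the details (the bounds of $\lambda\Omega_\alpha$ are reached for $i = \Omega_\alpha^-$ or $i = \Omega_\alpha^+$):

$$\forall i \in \Omega_\alpha,\ k + \lambda i \in \Omega_\beta \iff \Omega_\beta^- \le k + (\lambda\Omega_\alpha)^- \ \text{and}\ k + (\lambda\Omega_\alpha)^+ \le \Omega_\beta^+$$

hence we have

$$\Omega_{\gamma_1} = [\Omega_\beta^- - (\lambda\Omega_\alpha)^-,\ \Omega_\beta^+ - (\lambda\Omega_\alpha)^+]$$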

Thus the computation of Eq. (1) is split into two parts:

- one part, $\Omega_\gamma \cap \Omega_{\gamma_1}$, free of boundary effects,

- one part, $\Omega_\gamma \setminus \Omega_{\gamma_1}$, that requires boundary extension.

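The algorithm takes the following form:

- initialize $\gamma[k] = 0$ for $k \in \Omega_\gamma$,

- for $k \in \Omega_\gamma \cap \Omega_{\gamma_1}$, add $\sum_{i\in\Omega_\alpha} \alpha[i]\,\beta[k+\lambda i]$ (no boundary extension needed),

- for $k \in (\Omega_\gamma \setminus \Omega_{\gamma_1})^{\text{left}}$ and $k \in (\Omega_\gamma \setminus \Omega_{\gamma_1})^{\text{right}}$, add the same sum with $\beta$ replaced by its left or right boundary extension, respectively.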
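As a sanity check of the closed form $\Omega_{\gamma_1} = [\Omega_\beta^- - (\lambda\Omega_\alpha)^-,\ \Omega_\beta^+ - (\lambda\Omega_\alpha)^+]$, the following Julia sketch (the function names are hypothetical, not part of the code given later) compares it with a brute-force enumeration of the indices $k$ for which every $k + \lambda i$ stays in $\Omega_\beta$, for a few strides and kernel domains.

```julia
# Scaled domain λΩ: interval hull of {λω : ω ∈ Ω}
scaled_domain(λ::Int, Ω::UnitRange{Int}) =
    λ > 0 ? (λ*first(Ω):λ*last(Ω)) : (λ*last(Ω):λ*first(Ω))

# Closed form: Ωγ1 = [Ωβ⁻ - (λΩα)⁻, Ωβ⁺ - (λΩα)⁺]
function omega_gamma1(Ωα::UnitRange{Int}, λ::Int, Ωβ::UnitRange{Int})
    λΩα = scaled_domain(λ, Ωα)
    (first(Ωβ) - first(λΩα)):(last(Ωβ) - last(λΩα))
end

# Brute force: k such that k + λi ∈ Ωβ for all i ∈ Ωα (k scanned over a wide window)
brute_gamma1(Ωα, λ, Ωβ) = [k for k in -100:100 if all(k + λ*i in Ωβ for i in Ωα)]

for λ in (-3, -1, 1, 2), Ωα in (-1:2, 0:4, 3:3)
    @assert collect(omega_gamma1(Ωα, λ, 1:10)) == brute_gamma1(Ωα, λ, 1:10)
end
```

When the kernel span exceeds $|\Omega_\beta|$ (for instance $\lambda = -3$, $\Omega_\alpha = [0, 4]$), the interval is empty: Julia normalizes a range such as `13:10` to the empty range `13:12`.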

Second step, boundary extensions

Usually we define some classical boundary extensions. These extensions are computed from $\beta$ and sometimes come with a validity condition. For better clarity I give explicit lower/upper bounds (with $k \ge 1$):

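| Left boundary | $\beta[\Omega_\beta^- - k]$ | validity condition |
|---|---|---|
| Mirror | $\beta[\Omega_\beta^- + k]$ | $1 \le k \le \Omega_\beta^+ - \Omega_\beta^-$ |
| Periodic (or cyclic) | $\beta[\Omega_\beta^+ + 1 - k]$ | $1 \le k \le \Omega_\beta^+ - \Omega_\beta^- + 1$ |
| Constant | $\beta[\Omega_\beta^-]$ | none |
| Zero padding | $0$ | none |

| Right boundary | $\beta[\Omega_\beta^+ + k]$ | validity condition |
|---|---|---|
| Mirror | $\beta[\Omega_\beta^+ - k]$ | $1 \le k \le \Omega_\beta^+ - \Omega_\beta^-$ |
| Periodic (or cyclic) | $\beta[\Omega_\beta^- - 1 + k]$ | $1 \le k \le \Omega_\beta^+ - \Omega_\beta^- + 1$ |
| Constant | $\beta[\Omega_\beta^+]$ | none |
| Zero padding | $0$ | none |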

Since we want something general, we need to get rid of these validity conditions.

Periodic case

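Starting from a vector $\beta$ defined on

$$[0, n-1]$$

we want to define a periodic function $\hat\beta$ on $\mathbb{Z}$ of period $n$. This function must fulfill the

$$\hat\beta[k + n] = \hat\beta[k]$$

relation.

We can do that by considering $\hat\beta[k] = \beta[\operatorname{mod}(k, n)]$ where $k \in \mathbb{Z}$ and $\operatorname{mod}$ is the modulus function associated with floored division.

For a vector defined on an arbitrary domain $\Omega_\beta$, we first translate the indices, $k \mapsto k - \Omega_\beta^-$, and then translate them back using $k \mapsto k + \Omega_\beta^-$.

Putting it all together, we build a periodized vector

$$\hat\beta[k] = \beta\left[\Omega_\beta^- + \operatorname{mod}(k - \Omega_\beta^-, |\Omega_\beta|)\right]$$

where $|\Omega_\beta| = \Omega_\beta^+ - \Omega_\beta^- + 1$.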
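The periodized access $\hat\beta[k] = \beta[\Omega_\beta^- + \operatorname{mod}(k - \Omega_\beta^-, |\Omega_\beta|)]$ can be sketched in Julia as follows, for a vector stored in a 1-based array but living on an arbitrary domain (the storage convention and the helper name `periodic_value` are assumptions for illustration). Julia's `mod` is the floored one, so negative $k$ wrap correctly.

```julia
# Periodic extension on an arbitrary domain Ωβ (hypothetical helper).
# βvals[j] stores the component of index first(Ωβ) + j - 1.
function periodic_value(βvals::AbstractVector, Ωβ::UnitRange{Int}, k::Int)
    idx = first(Ωβ) + mod(k - first(Ωβ), length(Ωβ))  # index inside Ωβ
    return βvals[idx - first(Ωβ) + 1]                  # position in storage
end

βvals = [10.0, 20.0, 30.0]      # β[-1], β[0], β[1]
Ωβ = -1:1

ext = [periodic_value(βvals, Ωβ, k) for k in -4:4]
println(ext)   # prints [10.0, 20.0, 30.0, 10.0, 20.0, 30.0, 10.0, 20.0, 30.0]

# Period |Ωβ| = 3
@assert all(periodic_value(βvals, Ωβ, k + 3) == periodic_value(βvals, Ωβ, k) for k in -10:10)
```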

Mirror Symmetry case

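Starting from a vector $\beta$ defined on $[0, n]$, we can extend it by mirror symmetry on $[0, 2n]$ using

$$\hat\beta[k] = \beta[n - |n - k|]$$

with $k \in [0, 2n]$. The resulting vector fulfills the $\hat\beta[n - k] = \hat\beta[n + k]$ relation for $k \in [0, n]$.

To get a “global” definition we then periodize it on $\mathbb{Z}$ using $\operatorname{mod}(k, 2n)$ (attention: the period is $2n$ and not $2n+1$, otherwise the component $\hat\beta[2n] = \hat\beta[0] = \beta[0]$ is duplicated!).

For an arbitrary domain we use index translation as for the periodic case. Putting everything together we get:

$$\hat\beta[k] = \beta\left[\Omega_\beta^+ - \left|n - \operatorname{mod}(k - \Omega_\beta^-, 2n)\right|\right]$$

where $n = \Omega_\beta^+ - \Omega_\beta^-$ (so at least two samples are needed, $n \ge 1$).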
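A Julia sketch of this mirror formula (the helper name `mirror_value` and the 1-based storage convention are assumptions), evaluated on $\Omega_\beta = [0, 3]$, together with the period $2n+1$ pitfall mentioned above:

```julia
# Mirror (whole-sample symmetric) extension on Ωβ (hypothetical helper):
# extended β at k = β[Ωβ⁺ - |n - mod(k - Ωβ⁻, 2n)|], n = Ωβ⁺ - Ωβ⁻ (needs n ≥ 1)
function mirror_value(βvals::AbstractVector, Ωβ::UnitRange{Int}, k::Int)
    n = last(Ωβ) - first(Ωβ)
    idx = last(Ωβ) - abs(n - mod(k - first(Ωβ), 2n))
    return βvals[idx - first(Ωβ) + 1]
end

βvals = [1.0, 2.0, 3.0, 4.0]    # β[0], ..., β[3], so n = 3
Ωβ = 0:3
ext = [mirror_value(βvals, Ωβ, k) for k in -5:8]
println(ext)   # prints [2.0, 3.0, 4.0, 3.0, 2.0, 1.0, 2.0, 3.0, 4.0, 3.0, 2.0, 1.0, 2.0, 3.0]

# Pitfall: periodizing with 2n + 1 = 7 instead of 2n = 6 repeats β[0]
wrong(k) = βvals[3 - abs(3 - mod(k, 7)) + 1]
@assert wrong(6) == wrong(7) == 1.0
```

In the correct sequence each edge sample ($1.0$ and $4.0$) appears only once per period, whereas the wrong period produces $1.0, 1.0$ at $k = 6, 7$.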

Boundary extensions

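To use the algorithm with boundary extensions, you only have to define:

$$\beta[k] := \begin{cases} \Phi_{\text{left}}(\beta)[k] & k < \Omega_\beta^- \\ \Phi_{\text{right}}(\beta)[k] & k > \Omega_\beta^+ \end{cases}$$

where $\Phi_{\text{left}}$ and $\Phi_{\text{right}}$ are the boundary extensions you have chosen (periodic, constant, …). You do not have to take care of any validity condition: these formulas are general.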
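To see the four extensions side by side, here is a small Julia sketch (`extend` is a hypothetical helper using the same index formulas as above) that evaluates each of them for $\beta = (1, 2, 3, 4)$ on $\Omega_\beta = [1, 4]$, for $k = -2, \dots, 7$.

```julia
# Hypothetical helper: one of the four extensions of β (domain 1:length(β)) at index k
function extend(β::Vector{Float64}, kind::Symbol, k::Int)
    kmin, kmax = 1, length(β)
    kind === :ZeroPadding && return (kmin <= k <= kmax) ? β[k] : 0.0
    kind === :Constant    && return β[clamp(k, kmin, kmax)]
    kind === :Periodic    && return β[kmin + mod(k - kmin, kmax - kmin + 1)]
    kind === :Mirror      && return β[kmax - abs(kmax - kmin - mod(k - kmin, 2*(kmax - kmin)))]
    throw(ArgumentError("unknown extension $kind"))
end

β = [1.0, 2.0, 3.0, 4.0]
for kind in (:ZeroPadding, :Constant, :Periodic, :Mirror)
    println(rpad(string(kind), 12), [extend(β, kind, k) for k in -2:7])
end
```

The Mirror row reads 4 3 2 1 2 3 4 3 2 1 (edge samples not repeated) and the Periodic row reads 2 3 4 1 2 3 4 1 2 3, with no validity condition to check.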


Implementation

This is a straightforward implementation following the presented formulas as closely as possible. We did not try to optimize it, since this would have obscured the presentation. Some ideas: reverse $\alpha$ for $\lambda < 0$ (to access memory in the right order), use SIMD, use C++ meta-programming with loop unrolling for a fixed $\alpha$ size, specialize with respect to Vector/StridedVector, etc.

Preamble

Index translation / domain definition

There is, however, one last thing we have to explain. In languages like Julia or C, we manipulate arrays whose indices always start at the same value: $[1, n]$ in Julia or Fortran, or $[0, n-1]$ in C or C++.

For this reason we do not manipulate $\alpha$ on $\Omega_\alpha$ but another, translated array $\tilde\alpha$ defined on $[1, |\Omega_\alpha|]$ (Julia) or $[0, |\Omega_\alpha| - 1]$ (C++), where $|\Omega|$ denotes the number of elements of $\Omega$.

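To cover all cases, I assume that the starting index is denoted by $\tilde i_0$.

The $\tilde\alpha$ array is defined by:

$$\tilde\alpha[\tilde i] = \alpha[\tilde i + \Omega_\alpha^- - \tilde i_0], \qquad \tilde i \in [\tilde i_0,\ \tilde i_0 + |\Omega_\alpha| - 1]$$

Hence we must modify the initial Eq. (1) to use $\tilde\alpha$ instead of $\alpha$.

With $i = \tilde i + \Omega_\alpha^- - \tilde i_0$ we have

$$\alpha[i] = \tilde\alpha[\tilde i]$$

and

$$k + \lambda i = k + \lambda\tilde i + \lambda(\Omega_\alpha^- - \tilde i_0)$$

Thus, Eq. (1) becomes:

$$\gamma[k] = \sum_{\tilde i = \tilde i_0}^{\tilde i_0 + |\Omega_\alpha| - 1} \tilde\alpha[\tilde i]\,\beta\left[k + \lambda\tilde i + \lambda(\Omega_\alpha^- - \tilde i_0)\right]$$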

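The other arrays are less problematic:

- For the $\beta$ array, which is our input array, we implicitly use $\Omega_\beta = [\tilde i_0,\ \tilde i_0 + |\beta| - 1]$. This does not reduce the generality of the subroutine.

- For the $\gamma$ array, which is the output array, as for $\beta$ we assume it is defined on $[\tilde i_0,\ \tilde i_0 + |\gamma| - 1]$, but we provide $\Omega_\gamma$ to define the components we want to compute. The other components, $k \notin \Omega_\gamma$, will remain unmodified by the subroutine.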
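The following Julia sketch checks the translated form of Eq. (1) numerically (all names and values are illustrative assumptions): it evaluates the sum once with $\alpha$ on its own domain and once with the 1-based array $\tilde\alpha$ and the constant offset $\lambda(\Omega_\alpha^- - \tilde i_0)$, on indices $k$ where no boundary extension is needed.

```julia
i0 = 1                             # starting index ĩ0 (Julia)
Ωα = -2:1                          # mathematical domain of α
tilde_α = [0.5, -1.0, 2.0, 3.0]    # tilde_α[ti] = α[ti + Ωα⁻ - i0]
α_at(i) = tilde_α[i - first(Ωα) + i0]   # α[i] for i ∈ Ωα
β = collect(1.0:20.0)              # Ωβ = [1, 20], β[k] = k
λ = 3

# Eq. (1) written with α on its own domain
γ_orig(k) = sum(α_at(i) * β[k + λ*i] for i in Ωα)

# Translated Eq. (1): sum over ti with the constant offset λ(Ωα⁻ - i0)
offset = λ * (first(Ωα) - i0)
γ_tilde(k) = sum(tilde_α[ti] * β[k + λ*ti + offset] for ti in i0:(i0 + length(Ωα) - 1))

# Boundary-free indices: [1 - (λΩα)⁻, 20 - (λΩα)⁺] = [1 + 6, 20 - 3] = [7, 17]
@assert all(γ_orig(k) == γ_tilde(k) for k in 7:17)
```

Both versions add the same products in the same order, so the results agree exactly, not just up to rounding.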

Definition of $\Omega_\alpha$

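As we have seen before, the convolution subroutine takes $\tilde\alpha$ as argument, but we also need $\Omega_\alpha$. For the driver subroutine we do not provide this interval directly, because its length is redundant with the length of $\tilde\alpha$. Instead we provide an $\alpha_{\text{offset}}$ offset.

$\Omega_\alpha$ is deduced from:

$$\Omega_\alpha = [-\alpha_{\text{offset}},\ |\tilde\alpha| - \alpha_{\text{offset}} - 1]$$

Note: this definition does not depend on $\tilde i_0$.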

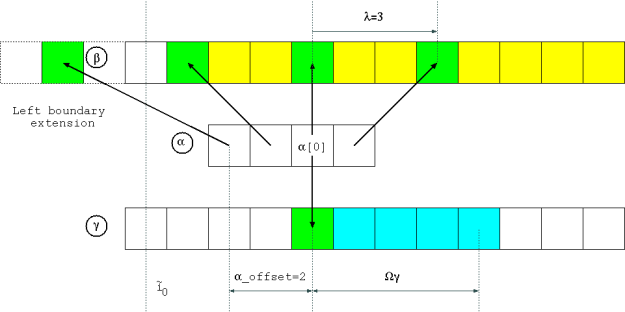

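With $\alpha_{\text{offset}} = 0$ you are in the “usual situation”, $\Omega_\alpha = [0, |\tilde\alpha| - 1]$. If you have a window of size $2n+1$, taking $\alpha_{\text{offset}} = n$ returns the middle of the window, $\Omega_\alpha = [-n, n]$. The figure gives a graphical representation of an arbitrary case: a filter of size $|\tilde\alpha|$ with given values of $\alpha_{\text{offset}}$ and $\lambda$.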
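A minimal Julia sketch of the $\alpha_{\text{offset}}$ convention, using a hypothetical helper `omega_alpha` that applies $\Omega_\alpha = [-\alpha_{\text{offset}},\ |\tilde\alpha| - \alpha_{\text{offset}} - 1]$:

```julia
# Ωα = [-α_offset, |α̃| - α_offset - 1]  (hypothetical helper name)
omega_alpha(len::Int, α_offset::Int) = (-α_offset):(len - α_offset - 1)

@assert omega_alpha(5, 0) == 0:4    # usual situation: α[0], ..., α[4]
@assert omega_alpha(5, 2) == -2:2   # window 2n + 1 = 5, α_offset = n = 2: centred
@assert omega_alpha(4, -1) == 1:4   # negative offsets are allowed too

# With λ = 1 and the centred window, γ[10] combines β[8], ..., β[12]
@assert 10 .+ 1 .* omega_alpha(5, 2) == 8:12
```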

Julia

Auxiliary subroutines

We start by defining the basic operations on sets:

```julia
# Scaled domain λΩ (interval hull of {λω : ω ∈ Ω})
function scale(λ::Int64, Ω::UnitRange)
    ifelse(λ > 0,
           UnitRange(λ*first(Ω), λ*last(Ω)),
           UnitRange(λ*last(Ω), λ*first(Ω)))
end

# Ωγ1 = [Ωβ⁻ - (λΩα)⁻, Ωβ⁺ - (λΩα)⁺]
function compute_Ωγ1(Ωα::UnitRange, λ::Int64, Ωβ::UnitRange)
    λΩα = scale(λ, Ωα)
    UnitRange(first(Ωβ) - first(λΩα), last(Ωβ) - last(λΩα))
end

# Left & Right relative complements A\B
#
function relelativeComplement_left(A::UnitRange, B::UnitRange)
    UnitRange(first(A), min(last(A), first(B) - 1))
end

function relelativeComplement_right(A::UnitRange, B::UnitRange)
    UnitRange(max(first(A), last(B) + 1), last(A))
end
```

Boundary extensions

We then define the boundary extensions. Nothing special here; we only had to check that the Julia mod(x,y) function is the floored division version (as opposed to the rem(x,y) function, which is the truncated, i.e. rounded toward zero, division version).

```julia
const tilde_i0 = Int64(1)   # Julia arrays start at index 1

function boundaryExtension_zeroPadding(β::StridedVector{T}, k::Int64) where {T}
    kmin = tilde_i0
    kmax = length(β) + kmin - 1
    if (k >= kmin) && (k <= kmax)
        β[k]
    else
        T(0)
    end
end

function boundaryExtension_constant(β::StridedVector{T}, k::Int64) where {T}
    kmin = tilde_i0
    kmax = length(β) + kmin - 1
    if k < kmin
        β[kmin]
    elseif k <= kmax
        β[k]
    else
        β[kmax]
    end
end

function boundaryExtension_periodic(β::StridedVector{T}, k::Int64) where {T}
    kmin = tilde_i0
    kmax = length(β) + kmin - 1
    β[kmin + mod(k - kmin, 1 + kmax - kmin)]
end

# Requires length(β) >= 2 (otherwise mod(., 0) throws a DivideError)
function boundaryExtension_mirror(β::StridedVector{T}, k::Int64) where {T}
    kmin = tilde_i0
    kmax = length(β) + kmin - 1
    β[kmax - abs(kmax - kmin - mod(k - kmin, 2*(kmax - kmin)))]
end

# For the user interface
#
const boundaryExtension = Dict(:ZeroPadding => boundaryExtension_zeroPadding,
                               :Constant    => boundaryExtension_constant,
                               :Periodic    => boundaryExtension_periodic,
                               :Mirror      => boundaryExtension_mirror)
```
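A quick Julia check of this difference, and of why it matters for the periodic index formula (the domain $[1, 5]$ is just an illustrative choice):

```julia
# Floored (mod) vs truncated (rem) remainder with a negative dividend
@assert mod(-1, 5) == 4     # floored: sign of the divisor
@assert rem(-1, 5) == -1    # truncated toward zero: sign of the dividend
@assert -1 % 5 == -1        # in Julia, % is rem

# Periodic index of k = 0, just left of the domain [1, 5]
kmin, kmax = 1, 5
@assert kmin + mod(0 - kmin, kmax - kmin + 1) == 5   # wraps to the last sample
@assert kmin + rem(0 - kmin, kmax - kmin + 1) == 0   # would index out of bounds
```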

Main subroutine

Finally we define the main subroutine. Its arguments have been defined in the preamble part. I just added one @simd and one @inbounds, because this has a significant impact on performance (see the end of this post).

```julia
function direct_conv!(tilde_α::StridedVector{T}, Ωα::UnitRange, λ::Int64,
                      β::StridedVector{T}, γ::StridedVector{T}, Ωγ::UnitRange,
                      LeftBoundary::Symbol, RightBoundary::Symbol) where {T}
    # Sanity check
    @assert λ != 0
    @assert length(tilde_α) == length(Ωα)
    @assert (first(Ωγ) >= 1) && (last(Ωγ) <= length(γ))

    # Initialization
    Ωβ = UnitRange(1, length(β))
    tilde_Ωα = 1:length(Ωα)
    for k in Ωγ
        γ[k] = zero(T)
    end
    rΩγ1 = intersect(Ωγ, compute_Ωγ1(Ωα, λ, Ωβ))

    # rΩγ1 part: no boundary effect, so unchecked accesses are safe
    #
    β_offset = λ*(first(Ωα) - tilde_i0)
    for k in rΩγ1
        s = zero(T)
        @simd for i in tilde_Ωα
            @inbounds s += tilde_α[i]*β[k + λ*i + β_offset]
        end
        γ[k] += s
    end

    # Left part
    #
    rΩγ1_left = relelativeComplement_left(Ωγ, rΩγ1)
    Φ_left = boundaryExtension[LeftBoundary]
    for k in rΩγ1_left
        for i in tilde_Ωα
            γ[k] += tilde_α[i]*Φ_left(β, k + λ*i + β_offset)
        end
    end

    # Right part
    #
    rΩγ1_right = relelativeComplement_right(Ωγ, rΩγ1)
    Φ_right = boundaryExtension[RightBoundary]
    for k in rΩγ1_right
        for i in tilde_Ωα
            γ[k] += tilde_α[i]*Φ_right(β, k + λ*i + β_offset)
        end
    end
    return γ
end

# Some UI functions, γ inplace modification
#
function direct_conv!(tilde_α::StridedVector{T}, α_offset::Int64, λ::Int64,
                      β::StridedVector{T}, γ::StridedVector{T}, Ωγ::UnitRange,
                      LeftBoundary::Symbol, RightBoundary::Symbol) where {T}
    Ωα = UnitRange(-α_offset, length(tilde_α) - α_offset - 1)
    direct_conv!(tilde_α, Ωα, λ, β, γ, Ωγ, LeftBoundary, RightBoundary)
end

# Some UI functions, allocates γ
#
function direct_conv(tilde_α::StridedVector{T}, α_offset::Int64, λ::Int64,
                     β::StridedVector{T},
                     LeftBoundary::Symbol, RightBoundary::Symbol) where {T}
    γ = Vector{T}(undef, length(β))
    direct_conv!(tilde_α, α_offset, λ, β, γ, UnitRange(1, length(γ)),
                 LeftBoundary, RightBoundary)
    γ
end
```

In C/C++

As this post is already long, I will not provide complete code here. The only trap is to use the right mod function.

The behavior of the C/C++ modulus operator % with negative operands was not standardized before C99 and C++11: only the D%d == D-d*(D/d) relation was guaranteed, the rounding direction of D/d being implementation-defined. Many CPUs (for instance the x86 idiv instruction) truncate toward zero, so compilers generally used this direction, and C99/C++11 made it mandatory. Either way, % cannot be relied upon to give the floored modulus we need.

To be safe, we explicitly use our own floored mod function:

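```cpp
// Floored mod
int modF(int D, int d)
{
  int r = D % d;  // truncated toward zero since C99/C++11
  // shift the remainder into the sign of the divisor
  // (also correct if an old compiler already floors)
  if ((r > 0 && d < 0) || (r < 0 && d > 0)) r += d;
  return r;
}
```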
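For comparison, here is a Julia transcription of the same correction logic, built on the truncated remainder `rem` and checked against Julia's floored `mod` over a grid of positive and negative operands. It is only a sketch to validate the branch conditions; in Julia itself one would simply call `mod`.

```julia
# Floored modulus built from the truncated remainder, as in the C/C++ modF
function modF(D::Int, d::Int)
    r = rem(D, d)                       # truncated toward zero (like C99/C++11 %)
    if (r > 0 && d < 0) || (r < 0 && d > 0)
        r += d                          # shift into the sign of the divisor
    end
    return r
end

for D in -20:20, d in (-7, -3, -1, 1, 2, 5)
    @assert modF(D, d) == mod(D, d)
end
```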

You can read:

Usage examples

Basic usage

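Beware that, due to the asymmetric role of $\alpha$ and $\beta$, the proposed approach does not preserve all the mathematical properties of the $\star_\lambda$ operator.

- Commutativity: $\alpha \star_{-1} \beta = \beta \star_{-1} \alpha$ holds only for ZeroPadding

- Adjoint operator: $\langle v, \alpha \star_\lambda \beta \rangle = \langle \alpha \star_{-\lambda} v, \beta \rangle$ holds only for ZeroPadding and Periodic

- I have assumed real arrays (not complex ones): some conjugations are missing

- Not considered here, but the extension to n-dimensional and separable filters is immediate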

```julia
push!(LOAD_PATH, "./")
using DirectConv
using LinearAlgebra   # dot, norm (no longer in Base since Julia 0.7)

α = rand(4);
β = rand(10);

# Check adjoint operator
# -> restricted to ZeroPadding & Periodic
#    (asymmetric role of α and β)
#
vβ = rand(length(β))
d1 = dot(direct_conv(α, 2, -3, vβ, :ZeroPadding, :ZeroPadding), β)
d2 = dot(direct_conv(α, 2, +3, β, :ZeroPadding, :ZeroPadding), vβ)
@assert abs(d1 - d2) < sqrt(eps())

d1 = dot(direct_conv(α, -1, -3, vβ, :Periodic, :Periodic), β)
d2 = dot(direct_conv(α, -1, +3, β, :Periodic, :Periodic), vβ)
@assert abs(d1 - d2) < sqrt(eps())

# Check commutativity
# -> λ = -1 (convolution) and
#    restricted to ZeroPadding
#    (asymmetric role of α and β)
v1 = zeros(20)
v2 = zeros(20)
direct_conv!(α, 0, -1, β, v1, UnitRange(1, 20), :ZeroPadding, :ZeroPadding)
direct_conv!(β, 0, -1, α, v2, UnitRange(1, 20), :ZeroPadding, :ZeroPadding)
@assert norm(v1 - v2) < sqrt(eps())

# Check interval splitting
# (should work for any boundary extension type)
#
γ = direct_conv(α, 3, 2, β, :Mirror, :Periodic)      # global computation
Γ = zeros(length(γ))
Ω1 = UnitRange(1:3)
Ω2 = UnitRange(4:length(γ))
direct_conv!(α, 3, 2, β, Γ, Ω1, :Mirror, :Periodic)  # compute on Ω1
direct_conv!(α, 3, 2, β, Γ, Ω2, :Mirror, :Periodic)  # compute on Ω2
@assert norm(γ - Γ) < sqrt(eps())
```

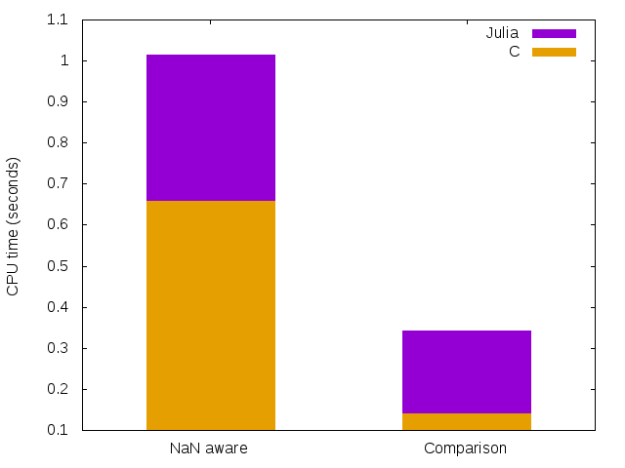

Performance?

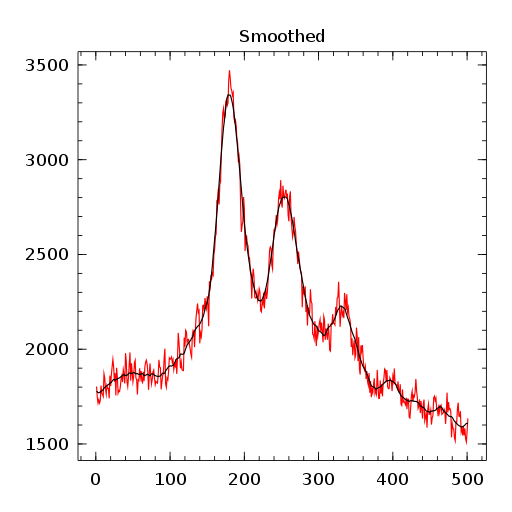

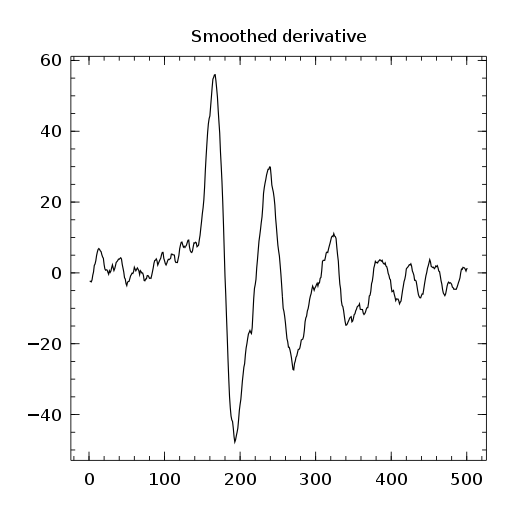

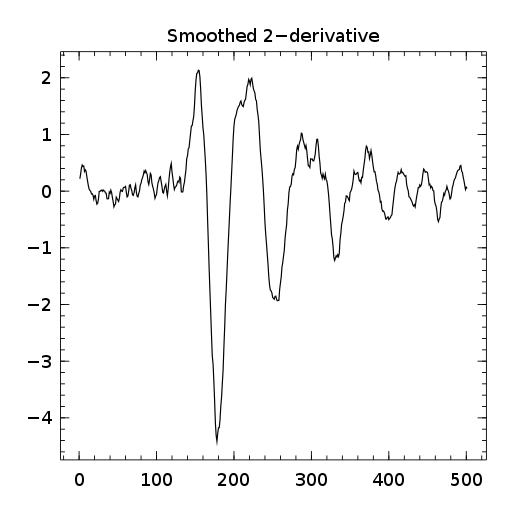

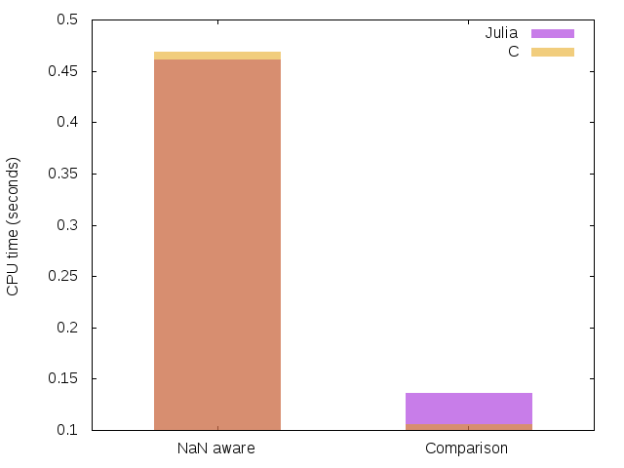

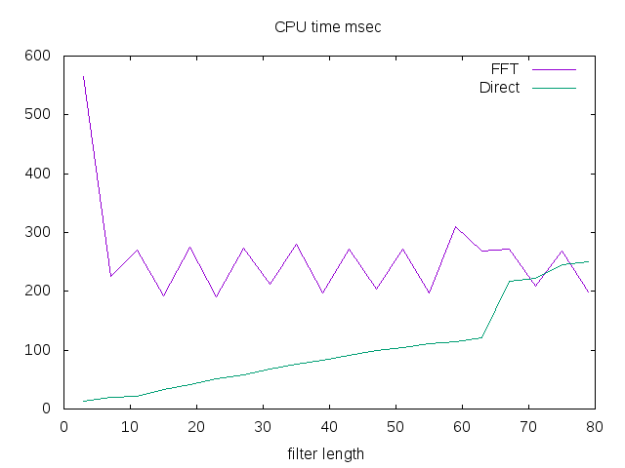

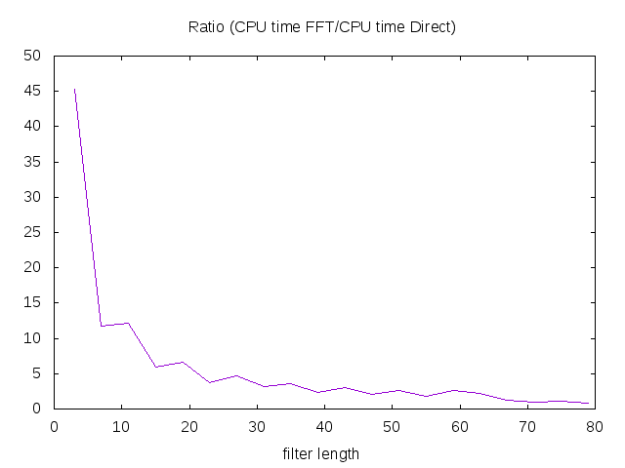

In a previous post I gave a short derivation of the Savitzky-Golay filters, and I used an FFT-based convolution to apply them. It is interesting to compare the performance of the direct approach presented here with the FFT one.

```julia
push!(LOAD_PATH, "./")
using DirectConv
using DSP            # conv (moved out of Base in Julia 0.7)
using LinearAlgebra  # norm

function apply_filter(filter::StridedVector{T}, signal::StridedVector{T}) where {T}
    @assert isodd(length(filter))
    halfWindow = div(length(filter) - 1, 2)
    padded_signal = [signal[1]*ones(halfWindow); signal; signal[end]*ones(halfWindow)]
    filter_cross_signal = conv(filter[end:-1:1], padded_signal)
    filter_cross_signal[2*halfWindow+1:end-2*halfWindow]
end

# Now we can create a (very) rough benchmark
# (the first iteration also includes JIT compilation time)
M = zeros(0, 3)
β = rand(1000000);
for halfWidth in 1:2:40
    α = rand(2*halfWidth + 1);
    fft_t0 = time()
    fft_v = apply_filter(α, β)
    fft_t1 = time()
    direct_t0 = time()
    direct_v = direct_conv(α, halfWidth, 1, β, :Constant, :Constant)
    direct_t1 = time()
    @assert norm(fft_v - direct_v) < sqrt(eps())
    global M = vcat(M, Float64[length(α) (fft_t1-fft_t0)*1e3 (direct_t1-direct_t0)*1e3])
end
M
```

We see that for small filters the direct method can easily be 10 times faster than the FFT approach!

Conclusion: for small filters, use a direct approach!

Discussion

Optimization/performance

If I have time, I will try to benchmark two basic implementations, a Julia one vs a C/C++ one. I’m a beginner in the Julia language; with C++ I’m more at home.

I would be curious to see the difference between a basic implementation and an optimized one in Julia, just to see how much optimization obfuscates (or not) the initial code, and what the performance gain is. In C++ you generally end up with a lot of boilerplate code (meta-programming, …).

Applications

The basic Eq. (1) is a common tool that can be used for:

- deconvolution procedures,

- decimated and undecimated wavelet transforms.

For wavelet transforms, especially the undecimated one, AFAIK Eq. (1) is really the right choice. I will certainly write some posts on these topics.

Some extra reading:

- The FFT way: Algorithms for Efficient Computation of Convolution, K. Pavel

- The Winograd’s minimal filtering algorithms way: Fast Algorithms for Convolutional Neural Networks, A. Lavin, S. Gray

- The OpenCL/GPU way: Case study: High performance convolution using OpenCL __local memory

Code

The code is on github.

Complement: more domains

The domain using at least one $\beta$ component

We have introduced the domain $\Omega_{\gamma_1}$ that does not violate the $\beta$ domain of definition (given $\Omega_\alpha$ and $\Omega_\beta$).

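To be exhaustive we can introduce the domain of the indices $k$ that use at least one $\beta[j]$, $j \in \Omega_\beta$.

This domain is:

$$\{k : \exists i \in \Omega_\alpha,\ k + \lambda i \in \Omega_\beta\}$$

Following arguments similar to those used for $\Omega_{\gamma_1}$, we get:

$$[\Omega_\beta^- - (\lambda\Omega_\alpha)^+,\ \Omega_\beta^+ - (\lambda\Omega_\alpha)^-]$$

This interval is exact as soon as $|\Omega_\beta| \ge |\lambda|$; otherwise it is only the enclosing interval, since for $|\lambda| > 1$ the indices $k + \lambda i$ can jump over a short $\Omega_\beta$.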

The domain of $\beta$ involved in the computation

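We can also ask the “dual” question: given $\Omega_\alpha$ and $\Omega_\gamma$, what is the domain of $\beta$, $\Omega_\beta$, involved in the computation of $\gamma[k]$, $k \in \Omega_\gamma$?

By definition, this is the smallest domain for which no boundary extension is needed, i.e. it must fulfill the following relation:

$$\Omega_{\gamma_1} = \Omega_\gamma$$

hence, using the expression of $\Omega_{\gamma_1}$,

$$[\Omega_\beta^- - (\lambda\Omega_\alpha)^-,\ \Omega_\beta^+ - (\lambda\Omega_\alpha)^+] = [\Omega_\gamma^-,\ \Omega_\gamma^+]$$

which gives:

$$\Omega_\beta = [\Omega_\gamma^- + (\lambda\Omega_\alpha)^-,\ \Omega_\gamma^+ + (\lambda\Omega_\alpha)^+]$$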
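A brute-force Julia sketch of this last result (helper names are hypothetical): it collects every $\beta$ index $k + \lambda i$ touched when computing $\gamma[k]$ for $k \in \Omega_\gamma$, and compares it with the interval $[\Omega_\gamma^- + (\lambda\Omega_\alpha)^-,\ \Omega_\gamma^+ + (\lambda\Omega_\alpha)^+]$. The test cases satisfy $|\Omega_\gamma| \ge |\lambda|$, so that the touched indices fill the whole interval.

```julia
# Interval hull of λΩ (same rule as the scale helper)
scaled_domain(λ::Int, Ω::UnitRange{Int}) =
    λ > 0 ? (λ*first(Ω):λ*last(Ω)) : (λ*last(Ω):λ*first(Ω))

# Ωβ = [Ωγ⁻ + (λΩα)⁻, Ωγ⁺ + (λΩα)⁺]  (hypothetical helper name)
function omega_beta(Ωα::UnitRange{Int}, λ::Int, Ωγ::UnitRange{Int})
    λΩα = scaled_domain(λ, Ωα)
    (first(Ωγ) + first(λΩα)):(last(Ωγ) + last(λΩα))
end

# Brute force: every β index k + λi touched for k ∈ Ωγ, i ∈ Ωα.
# Here |Ωγ| ≥ |λ|, so the touched indices fill the whole interval.
for λ in (-2, -1, 1, 3), Ωα in (-1:2, 0:0, 2:5), Ωγ in (1:10, -3:0)
    touched = sort(unique([k + λ*i for k in Ωγ for i in Ωα]))
    @assert touched == collect(omega_beta(Ωα, λ, Ωγ))
end
```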